Project FAQ

Good news! This is your first fully solo data analysis project, and the first step as you start to walk independently down this thorny path. Although you may face difficulties, you'll find some tips below to help you solve any potential problems.

Tutor Tips

Ok, some of you are done with the theory and exercises and ready to start your project.

Feeling nervous or don't know what to do?

Here are some questions to answer while dealing with a new dataset.

Just follow these steps and it will make the preprocessing sprint easier.

Our tutor Anastasia has prepared some important tips to help you complete your first project.

New dataset cheatsheet

- Load all the libraries and the dataset itself

- Use .info(), .head(), .tail(), and .sample() to get a basic understanding of your data.

- How many columns does your data have? What about rows? Is the data corrupted in some way? Do you see missing or weird values? Can you explain them at this point? Do you need to change any data types, and why? This is where you can change data types and rename columns, if necessary. You can check the links for additional information.

- Identify the data type and change it:

- Renaming columns

- Use .describe() to get a statistical summary of your columns.

- This will help you identify whether the dataset has lots of zeros or weird outliers (like 20 kids). Do these values make sense to you? If not, investigate them!

- You can use .value_counts() to check the most popular values in any particular column. What are the most popular values in your columns? Do you see any weird patterns?

- Here is a nice article that explains how to explore your dataset.

- Missing values. How to identify? Replace or drop?

- This is a short and nice article that explains what kind of missing values we can have in a dataset and how to spot them.

- There are 2 main ways to handle missing values

- Drop missing values: this is the easiest way, but keep in mind what you're dropping and what part of the data you are losing. A general rule of thumb is that we shouldn't drop more than 5-10% of the data. Before dropping, think twice about the columns and rows. Maybe you're dropping an entire row because of a column that you might not be using at all.

- Replace missing values: this is the most common strategy. You can fill in missing values with one specific value (the mean or median, for example), or with values derived from the column itself, from another column, or even from the entire DataFrame (more details here and here).

- When replacing missing data, keep in mind what percentage of rows you're replacing with the average value. For example, if 60% of a column's values are missing and you replace all of them with a single mean value, how will that affect your analysis?

- Last but not least – dropping duplicates

- It is better to do this at the end, after you're done with preprocessing, because some duplicates may disappear during preprocessing (e.g., after you fill in the missing values). On the other hand, new duplicates may appear (e.g., when you convert all the values to lowercase).
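To make the cheatsheet above more concrete, here is a minimal sketch of the same steps on a small hypothetical DataFrame. The column names ('children', 'dob_years', 'education', 'total_income') and all values are invented for illustration; with the real project you would load the dataset from a file instead. Note how lowercasing the 'education' column reveals a duplicate row that was hidden before, which is why de-duplication comes last.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data standing in for the project dataset
df = pd.DataFrame({
    'children': [0, 1, 20, 2, 0, 20],
    'dob_years': [42, 0, 35, 28, 53, 35],
    'education': ["Bachelor's Degree", "bachelor's degree", 'Secondary Education',
                  'SECONDARY EDUCATION', 'secondary education', 'SECONDARY EDUCATION'],
    'total_income': [40620.1, np.nan, 25300.0, 31100.5, np.nan, 25300.0],
})

# 1. First look: shape, dtypes and non-null counts, plus a few rows
df.info()
print(df.head())

# 2. Summary statistics: look for suspicious zeros and outliers (20 children?)
print(df.describe())

# 3. Most frequent values in a column (note the case variants)
print(df['education'].value_counts())

# 4. An age of 0 is not plausible, so treat it as a missing value
df['dob_years'] = df['dob_years'].replace(0, np.nan)

# 5. Share of missing values per column, to help decide: drop or replace?
print(df.isna().mean())

# 6. Replace missing income with the overall median (a simple baseline;
#    the group-wise approach discussed later in this FAQ is usually better)
df['total_income'] = df['total_income'].fillna(df['total_income'].median())

# 7. Normalize case first, then count and drop duplicates
df['education'] = df['education'].str.lower()
print('duplicates:', df.duplicated().sum())
df = df.drop_duplicates().reset_index(drop=True)
print(df)
```

The outlier in 'children' is deliberately left untouched here; step 2 only flags it for investigation.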

We've covered the main steps to get you started; hopefully this will keep you on track as you begin your project.

Top 10 tips and tricks based on common mistakes

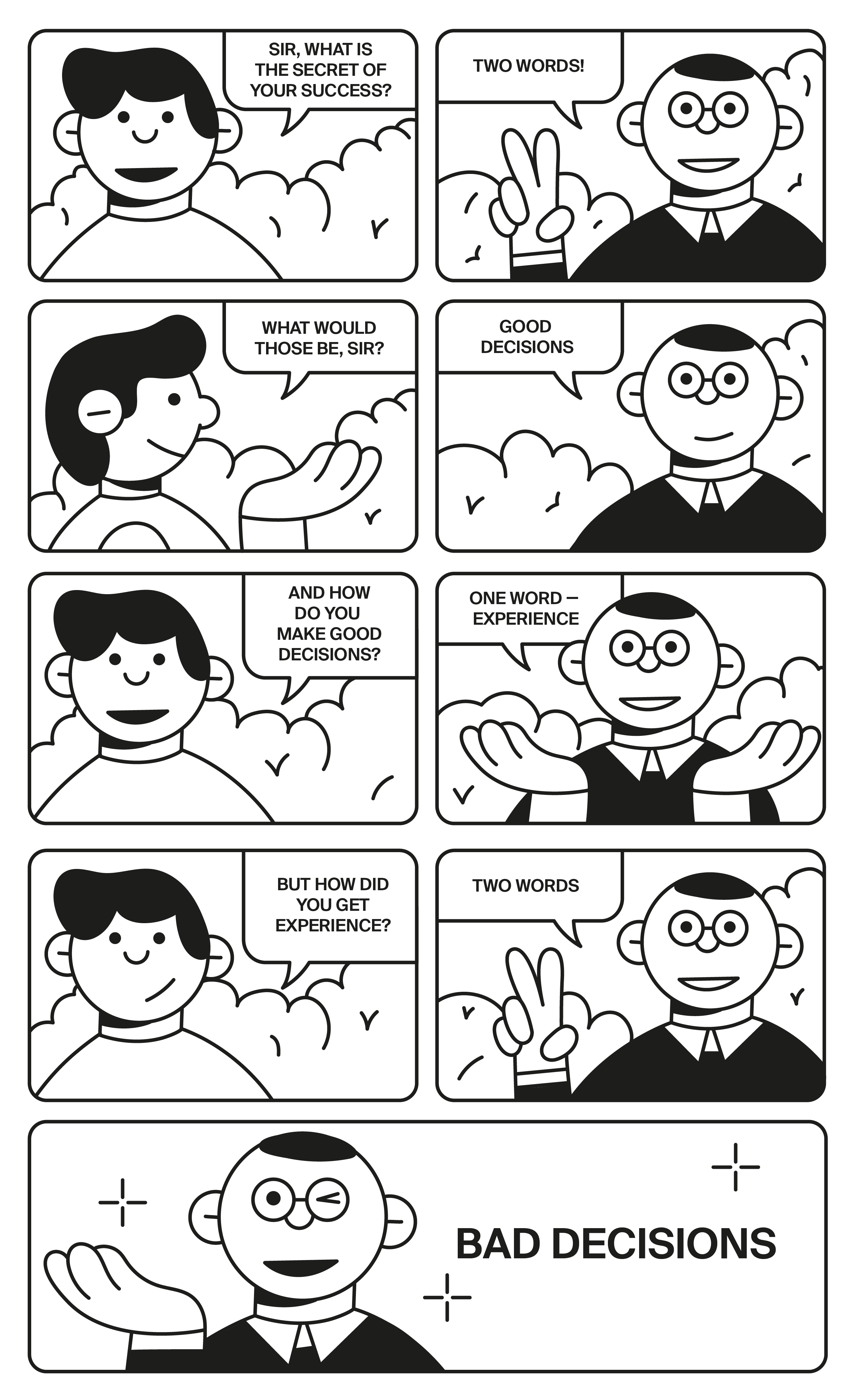

While it's true that we learn from our mistakes, some of them can still be avoided in advance.

If you're stuck on the first project, here are 10 tips based on 10 mistakes that students most frequently make.

- Write conclusions: your projects are not only about your technical skills but also about your ability to understand and interpret your data. Your conclusions are key to developing analytical thinking. It will be essentially impossible for me to assist you if I can't see your conclusions.

- Justify your decisions: you may opt for a variety of approaches during data preprocessing, and I am totally fine with that, but please be ready to justify your decisions. For example, a student may drop a column containing bizarre values because that parameter won't be used in further analysis. But before dropping it, the student should investigate the parameter and show that the data is actually corrupted, so that dropping it is clearly the sensible choice.

- Show me the code and explain your logic: whenever you do something, please explain it and actually show the output/print the result. This helps me better understand you and your logic. Please don't leave a single line of code that replaces all missing income values with the mean without any justification. Think about the reason for such a decision.

- Missing values: zeros can also be missing values in some cases, so please don't forget about that. Can we actually have customers whose age is 0?

- Duplicates: it is good practice to check for duplicates and delete them AFTER you're done with the rest of preprocessing.

- 'days_employed' is trickier than it seems: make sure you pay careful attention to this parameter and investigate it a little further. Even if you don't need it to answer the research questions, don't ignore it completely.

- Categorization is not about code but about categories: creating dictionaries and categorizing data may get boring. But if you are a little curious, you can investigate different categories and their distributions, find out what is unique to the members of a particular category, and then develop your theories about connections in the data. What's the deal with demographics? Dig in a little and learn more about these groups and the reasons they might take out a loan. Even though this isn't explicitly stated in the task, I encourage you to play detective a little here. Your goal isn't to find which group contains the most people with unpaid debt. You need to find the default rate.

- Absolute values mean nothing

- General conclusion: your general conclusion should describe the research you've done: the data you've been working with, its distribution, the main points from your previous conclusions, and the answers to the research questions.

- Final recommendation: you're an analyst now! The bank needs your recommendations about its loan system and your suggestions for how to improve it.
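To illustrate why absolute values mean little and the default rate is what matters, here is a minimal sketch on hypothetical data. It assumes a 'children' column and a 'debt' column encoded as 1 for a borrower who defaulted and 0 otherwise; both the encoding and the numbers are invented for this example. Because the mean of a 0/1 column is the share of ones, the default rate per group is simply the group mean.

```python
import pandas as pd

# Hypothetical toy data: 'debt' is 1 if the borrower defaulted, 0 otherwise
df = pd.DataFrame({
    'children': [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 2, 2],
    'debt':     [1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0],
})

# Per group: number of borrowers, absolute number of debtors,
# and the default rate (mean of a 0/1 column = share of debtors)
summary = df.groupby('children')['debt'].agg(
    borrowers='count', debtors='sum', default_rate='mean'
)
print(summary)
```

Here the group without children has the most debtors (2) only because it is the largest group, while the group with two children has the highest default rate (0.5). Keep an eye on group sizes as well: a rate computed from a handful of borrowers is not very reliable.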

I hope these tips will help you as you conquer this project!

Useful links

Hitting a wall with preprocessing? Here are some links to help you tackle the first sprint's toughest topics:

- A nice guide to the print() function

- How to create and use masks

- How to deal with missing values

We know that working on projects independently is hard, but we believe in all of you!

Good luck!

Project Formatting

For a Data Analyst, solving a problem and making code work is a good thing. It's even better if your work is easy to understand, the purpose is clear, and the team is easily able to reuse your solution.

To do this, you'll need to correctly format your work so that other people know exactly what it does.

The top 3 hints for formatting your project

- Use the structure we have prepared in our Jupyter Hub

If you want to add sections, please use Markdown cells. You can change the cell type on the toolbar (the default is Code).

To properly structure section headings and write conclusions, check out the Markdown formatting hints at the bottom of our guide:

- Using our Jupyter Hub is preferred

In a programmer's life, it's common to run into incompatibility problems between different library versions. To keep you from being distracted by these difficulties at the outset of your journey, we've already set everything up for you in our Jupyter Hub, so we recommend working there rather than on your local machine.

- Do not delete reviewer comments

Please don't delete comments that reviewers have written.

When they check your code for the second time, it will be much easier for the reviewer to track your progress in specific places without analyzing the entire project again.

FAQ

If you have any questions about the project, first of all, don't worry: that's absolutely fine!

The next thing you should probably do is read the project description again very carefully (especially the part related to your question) and find the corresponding lesson to refresh your knowledge. Below, we've provided some additional information that you might find useful.

General

How to find answers to programming questions?

We could give you a direct answer, but that wouldn't be fair, would it? First of all, did you try googling? As an aspiring programmer, the first and most important skill you'll need to learn is how to use a search engine properly and make sense of the results. For example, if you are interested in some pandas functionality, try searching for "pandas drop duplicates". This query will return a number of pages, some of which will lead to the official pandas documentation, while others will be links to Stack Overflow threads. Honestly, in these cases the documentation is probably more useful, because it is written with general use in mind. Stack Overflow posts, on the other hand, usually answer one particular question, which might not apply to your situation.

If you still have questions after that, look for the answer here or feel free to ask in Slack.

How to write a good conclusion?

For TripleTen projects, reports should contain enough detail for the reviewers to fully understand the depth and quality of your work. More detail tends to lead to richer reviewer feedback; however, try to strike a balance and don't overdo it! It's probably overkill to fill in every section of that structure for your project reports, but feel free to design your own based on that outline. For a project, a good report would be roughly one page (one large screen) of structured text with references back to key sections of your project. I know of at least two sources you might want to read; both of them offer advice on writing a statistical consulting report:

What is the "pythonic" approach to data analysis?

I don't think there are any strict guidelines on this. Most of the time in data analysis, you're not restricted by memory or CPU usage. Therefore, in my opinion, your main concern should be to organize your code in such a way that you make as few mistakes as possible, i.e., use whatever feels most comfortable to you. That, of course, doesn't mean you should use a for loop to iterate through a dataset with 10 million values just because you can, but creating 10 additional DataFrames definitely shouldn't be a major concern.

By the way, there is a curious Easter egg in Python, the so-called "Zen of Python", which you can summon by executing "import this" (or simply by googling it). Even though it's a joke, it still has some good thoughts on the matter and is very much worth reading.

There are pythonic ways of doing things. A starting point is to read the above-mentioned Zen of Python (https://www.python.org/dev/peps/pep-0020/). It boils down to coding in a simple and straightforward way while making as much use as possible of Python's unique features. You can also read the Google Python Style Guide (https://google.github.io/styleguide/pyguide.html) for a more detailed view. I have not really seen a good code style guide specific to DA/DS scripts. But since such scripts usually rely heavily on numpy, pandas, seaborn, and matplotlib, you can look at the code examples for those libraries, e.g.:

Examples for numpy/pandas from the book by Wes McKinney (one of the core developers of pandas): https://github.com/wesm/pydata-book

Examples for matplotlib from the documentation: https://matplotlib.org/gallery/index.html

Examples for seaborn from the documentation: http://seaborn.pydata.org/tutorial.html

I believe each analyst gradually develops their own style over time. As long as your notebook can easily be understood by your colleagues, your style is great. As to the specifics in the first two messages, I'd say:

1. Do not create too many DataFrames with individual names, or you may get confused by the names. It might be better just to copy subsets. Alternatively, be really careful with naming and develop a consistent system for naming variables.

2. Subsetting operations and methods like [] and query() may return a view of a DataFrame rather than a copy. This is fine unless you try to update that view: you may see relevant warnings, and you might actually update the original DataFrame (which is usually not what you intended). If you need to separate a subset from the original DataFrame, use the .copy() method. It will create the subset as a completely separate DataFrame.
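Here is a minimal sketch of the .copy() advice, using a hypothetical two-column DataFrame. It shows a filtered subset being modified independently of the original, and, for contrast, how to change the original on purpose with .loc and a boolean mask. Exactly when pandas warns about (or propagates) changes to a subset varies between pandas versions, so treat the comments as a general guide rather than a precise description of every release.

```python
import pandas as pd

# Hypothetical data for illustration
df = pd.DataFrame({
    'income_type': ['employee', 'student', 'employee'],
    'total_income': [30000.0, 8000.0, 45000.0],
})

# Without .copy(), pandas may treat this subset as linked to df, and
# assigning to it can trigger a SettingWithCopyWarning.
# With .copy(), the subset is an independent DataFrame.
employees = df[df['income_type'] == 'employee'].copy()
employees['total_income'] = employees['total_income'] * 1.1

# The original DataFrame is not affected by the change above
print(df)

# To modify the original on purpose, use .loc with a mask on df itself
df.loc[df['income_type'] == 'student', 'total_income'] = 9000.0
print(df)
```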

How to deal with numbers that look like 8.437673e+05?

Writing a number using the 'e' sign is called scientific notation. This notation is convenient for representing very large or very small numbers; for example, numbers written this way are common in scientific papers. To display numbers in regular decimal notation in a Jupyter notebook, change the pandas display settings for DataFrames with the following code:

    import pandas as pd
    pd.set_option('display.float_format', lambda x: '%.3f' % x)

Now all floats will be displayed rounded to three decimal places. This only changes how values are displayed, not the values themselves.

Imperfect data

What should I do with negative values in the 'days_employed' column?

Real data often contains a lot of junk, outliers, and so on. This can happen due to database errors, the way the data was collected, or a number of other reasons. The best way to deal with it is to ask the customer what happened with the data and what any strange values might mean. Unfortunately, sometimes we don't have this option and have to sort through the junk ourselves. Such is the case here. For the 'days_employed' column, you'll need to suggest the most likely hypothesis about these negative values, then fix them according to that hypothesis. Don't forget to explain all your findings to the reviewer before moving on to the next tasks.

What is an appropriate rule to fill in missing data (e.g. for 'total_income')?

In its simplest form, it can be just a typical value (the median or the mean) for the whole population. On a more granular level, it can be a typical value for each distinct group in the population; the latter is the more sophisticated approach. For 'total_income', a trivial mean (or median) replacement is far from the optimal choice. I'd recommend grouping the values based on, for example, both 'education' and 'income_type', and then replacing the missing values with the median calculated for each resulting group. You can safely expect that an entrepreneur earns more than a student, for example, or that an employee with a higher level of education (like a lawyer) earns more than an employee with a basic education (like a janitor). Such a careful and detailed approach to data preprocessing will help you draw better conclusions and build a higher-scoring model in the future.

How to deal with it technically:

- To fill in missing values with a constant, you can use the .fillna(<value>) method on a column.

- The case with separate groups is not so straightforward. You shouldn't try to update a DataFrame while iterating over it, but you can use the groupby/transform functions. You can find out how to do it here: https://stackoverflow.com/questions/41680089/how-to-fillna-by-groupby-outputs-in-pandas. You can also find a large number of examples of the so-called split-apply-combine approach in the pandas docs: https://pandas.pydata.org/pandas-docs/stable/user_guide/groupby.html

In terms of duplicated values (e.g. in the 'education' column), is it advisable to delete them or combine them?

If you leave this column as is, it can cause technical problems later. With de-duplication, more effort is spent during preprocessing, but you are typically rewarded later, during the analysis, with less technical hassle. So I'd say it makes sense to skip de-duplication if you are not planning to factor this variable into your analysis; otherwise, it's better to treat the data at an early stage to make subsequent steps easier. In any case, try to support your decision with comments. It will help both you and the reviewer keep track of the logic in your project.

How to deal with large values (e.g. 20 children)?

These strange values are called outliers. By definition, outliers are extreme values in the input data that lie far beyond the other observations. They often occur as a result of human error during data recording or due to other technical issues. Outliers may also be correct but too different from the rest of the data, e.g. an income 15 times higher than the median of the entire sample. Whatever their origin, outliers can distort our conclusions from the data or the predictions of a machine learning model, so it is worth dealing with them.

- Errors in data that cannot be understood can be regarded as missing. They can be replaced by a simple average or median, or these values can be calculated within some groups.

- Incorrect negative values can be replaced with absolute values.

- For values in the sample that are too large or too small, you can use winsorization: replace these values with a quantile value, for example, the 0.9 quantile (see the .quantile() method). More information here: https://en.wikipedia.org/wiki/Winsorizing
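The three techniques in the list above can be sketched in pandas as follows. This is a minimal illustration on invented data, not a recipe for the project: taking absolute values of 'days_employed' assumes the hypothesis that only the sign is wrong, the grouping columns 'education' and 'income_type' follow the suggestion earlier in this FAQ, and the 10th/90th percentile bounds for winsorization are an arbitrary choice for the example.

```python
import numpy as np
import pandas as pd

# Hypothetical sample using the column names mentioned in this FAQ
df = pd.DataFrame({
    'education': ['secondary', 'secondary', 'secondary',
                  "bachelor's", "bachelor's", "bachelor's"],
    'income_type': ['employee'] * 6,
    'days_employed': [-1200.5, -300.0, 2500.0, -4000.0, np.nan, -800.0],
    'total_income': [20000.0, np.nan, 24000.0, 50000.0, 46000.0, np.nan],
})

# Negative durations: under the (assumed) hypothesis that only the sign
# is wrong, replace them with absolute values; NaN stays NaN
df['days_employed'] = df['days_employed'].abs()

# Missing income: fill with the median of rows that share the same
# education and income_type (split-apply-combine via transform)
group_median = (df.groupby(['education', 'income_type'])['total_income']
                  .transform('median'))
df['total_income'] = df['total_income'].fillna(group_median)
print(df)

# Winsorization: cap values below the 10th and above the 90th percentile
income = pd.Series([21000.0, 23000.0, 25000.0, 26000.0, 27000.0,
                    28000.0, 30000.0, 32000.0, 35000.0, 400000.0])
lower, upper = income.quantile([0.1, 0.9])
winsorized = income.clip(lower=lower, upper=upper)
print(winsorized)
```

transform('median') returns a Series aligned with the original rows, so it can be passed straight to fillna() without looping over the DataFrame.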

As always, don't forget to comment your steps and explain all of your findings to the reviewer.

Categorizing data

What columns should be categorized?

The project description hints at which variables can be categorized to ease the analysis. If you read step 3, you'll see questions referring to different variables. Use categories at least for those. However, feel free to analyze how the default rate depends on the other variables as well.

How to categorize the 'purpose' column?

The 'purpose' column contains text, so it should be preprocessed properly to turn it into features. Here are some options:

- You can write a function that takes only one string argument (purpose) and returns a stemmed representation of this string (stemmed purpose). More about Python functions here: https://www.w3schools.com/python/python_functions.asp

- Use the .apply() method with your function to create a new column with the stemmed purpose.

- Look at the unique stemmed purposes to decide which categories are better to create. The .unique() method is good for that.

- Write another function that takes only one string argument (stemmed purpose) and returns the category of this purpose. The function should consist of just several if-statements. To find a substring in a string, use the string method .find(). More about its usage here: https://www.w3schools.com/python/ref_string_find.asp

- Again, use the .apply() method with your new function to create a new purpose category column.
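The steps above can be sketched as follows. To keep the example dependency-free, stem_purpose() is a hypothetical, deliberately crude stand-in for a real stemmer (it only lowercases the text and strips "ing" or a trailing "s" from each word); in practice you might use a proper stemmer or lemmatizer, for example from NLTK. The purpose strings, keywords, and category names are also invented for illustration; pick yours after looking at the unique stemmed values in your data.

```python
import pandas as pd


def stem_purpose(purpose):
    """Hypothetical crude stemmer: lowercase the text and strip a few
    common English endings from each word."""
    stemmed_words = []
    for word in purpose.lower().split():
        for suffix in ('ing', 's'):
            # Only strip the ending when a reasonably long stem remains
            if word.endswith(suffix) and len(word) > len(suffix) + 2:
                word = word[:-len(suffix)]
                break
        stemmed_words.append(word)
    return ' '.join(stemmed_words)


def categorize_purpose(stemmed):
    """Map a stemmed purpose to a category using str.find() checks."""
    if stemmed.find('car') != -1:
        return 'car'
    if stemmed.find('wedd') != -1:
        return 'wedding'
    if stemmed.find('educ') != -1 or stemmed.find('universit') != -1:
        return 'education'
    if (stemmed.find('hous') != -1 or stemmed.find('estate') != -1
            or stemmed.find('propert') != -1):
        return 'real estate'
    return 'other'


df = pd.DataFrame({'purpose': [
    'buying a car', 'cars', 'to have a wedding', 'getting an education',
    'going to university', 'housing', 'real estate transactions',
    'purchase of the house', 'building a property', 'supplementary education',
]})

# Step 1: stemmed purpose column, then inspect the unique values
df['purpose_stemmed'] = df['purpose'].apply(stem_purpose)
print(df['purpose_stemmed'].unique())

# Step 2: category column built from the stemmed purposes
df['purpose_category'] = df['purpose_stemmed'].apply(categorize_purpose)
print(df)
```

The order of the if-statements matters: the first matching keyword wins, so check the output of .unique() and the resulting categories to make sure no purpose ends up in the wrong group.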

If you need some help, do not hesitate to ask in the cohort_#_projects channel.